How Peter Steinberger turned personal frustration into a €100M business and GitHub’s fastest-growing repository

Most founders dream of going viral. Peter Steinberger has done it twice, once through a decade of relentless execution, once in a moment of inspired simplicity.

His first act was PSPDFKit: a ten-year journey from solo side project to a 70-person company powering PDF workflows on over 1 billion devices. Clients included Dropbox, SAP, and Volkswagen. In 2021, it raised €100 million from Insight Partners.

His second act took one hour to build and three months to reach 145,000 GitHub stars.

That project is OpenClaw a local-first AI agent that doesn’t just chat, but acts across your apps, files, and command line. The contrast is stark: PSPDFKit was built on dependability and methodical growth. OpenClaw exploded in velocity and viral momentum. Yet both emerged from the same source: personal frustration transformed into a product.

And OpenClaw is forcing the tech industry to confront a question it’s been avoiding: What happens when AI stops being a feature and starts being an operating layer?

In this story, you’ll discover how a moment of rage on a Vienna subway and a nine-month visa delay accidentally built a €100M business, why Peter’s burnout after selling PSPDFKit became the catalyst for his most explosive success, and how the one-hour prototype became the fastest-growing repository in GitHub history. You’ll learn what happened when AI agents built their own religions, governments, and became aware of human surveillance, explore the security crisis that exposed 93% of OpenClaw instances and what it means for AI’s future, and understand why personal frustration might be the most reliable form of product-market fit.

Want Peter to join a FounderCoHo event or interview? Hit like and share to make it happen.

How a Moment of Rage on a Subway Led to a €100M Business

Peter’s story started with a moment of rage.

In 2009, on a subway in Vienna, Peter was using a dating app on iPhone OS 2. He typed a long message. The train entered a tunnel. The send button was disabled because he lost the connectivity. No copy-paste. No screenshots. No way to recover the text.

“I lost everything,” he recalled. That frustration drove him to build his first iOS app.

Fast forward to 2011: Peter gets a dream job offer from a San Francisco startup. He accepts immediately and waits for his work visa.

The wait lasts nine months.

“I can’t really work on longer projects when I’m moving to San Francisco any day now,” Peter explained. So he stopped freelancing and suddenly had abundant free time.

A friend asked if he could reuse a PDF engine Peter had built for a client. Peter refactored it, sold it, and thought: “If he has this requirement, maybe others do too.”

That casual decision became PSPDFKit.

The Double Life

When the visa finally came through, Peter moved to San Francisco and joined the startup. But he couldn’t let go of his PDF project.

“A startup is not eight hours of work,” Peter recalled. “So I did that during the day. I did my thing at night and on weekends.”

His manager noticed something was wrong. “Peter, is everything okay? You don’t look so good.”

Peter had to choose. He chose PSPDFKit.

Building the Unsexy Way

PSPDFKit didn’t grow through hype. Peter’s approach was methodical:

“My marketing strategy is simple: Only care about developers. If I can convince the developers in a company, they’ll do the internal promotion for me.”

He obsessed over developer experience, wrote world-class blog posts, and focused on dependability.

“You don’t win by hype; you win by shipping. You don’t win by a flashy demo; you win by integration. You don’t win by being first; you win by being dependable enough that others build on top of you.”

The company grew to 70 people. PSPDFKit is now used on over 1 billion devices.

The €100M Moment and the Crash

In 2021, PSPDFKit raised €100 million from Insight Partners.

For Peter, it was also the beginning of a crisis.

“I put 200% of my time, energy, and heart into that company; it became my identity. When it disappeared, there was almost nothing left.”

He described himself as “completely burned out” and disappeared from tech for three years.

At a 2024 conference, Peter gave a deeply personal talk about what it’s like to sell something you’ve identified with for so long. One attendee wrote:

“Who are you when you sell a large part of who you consider yourself to be? Who are you in that deep void, when you have no sense of purpose?”

Then AI pulled him back.

The One-Hour Revolution

November 2025: The Prototype

The frustration had been building for months.

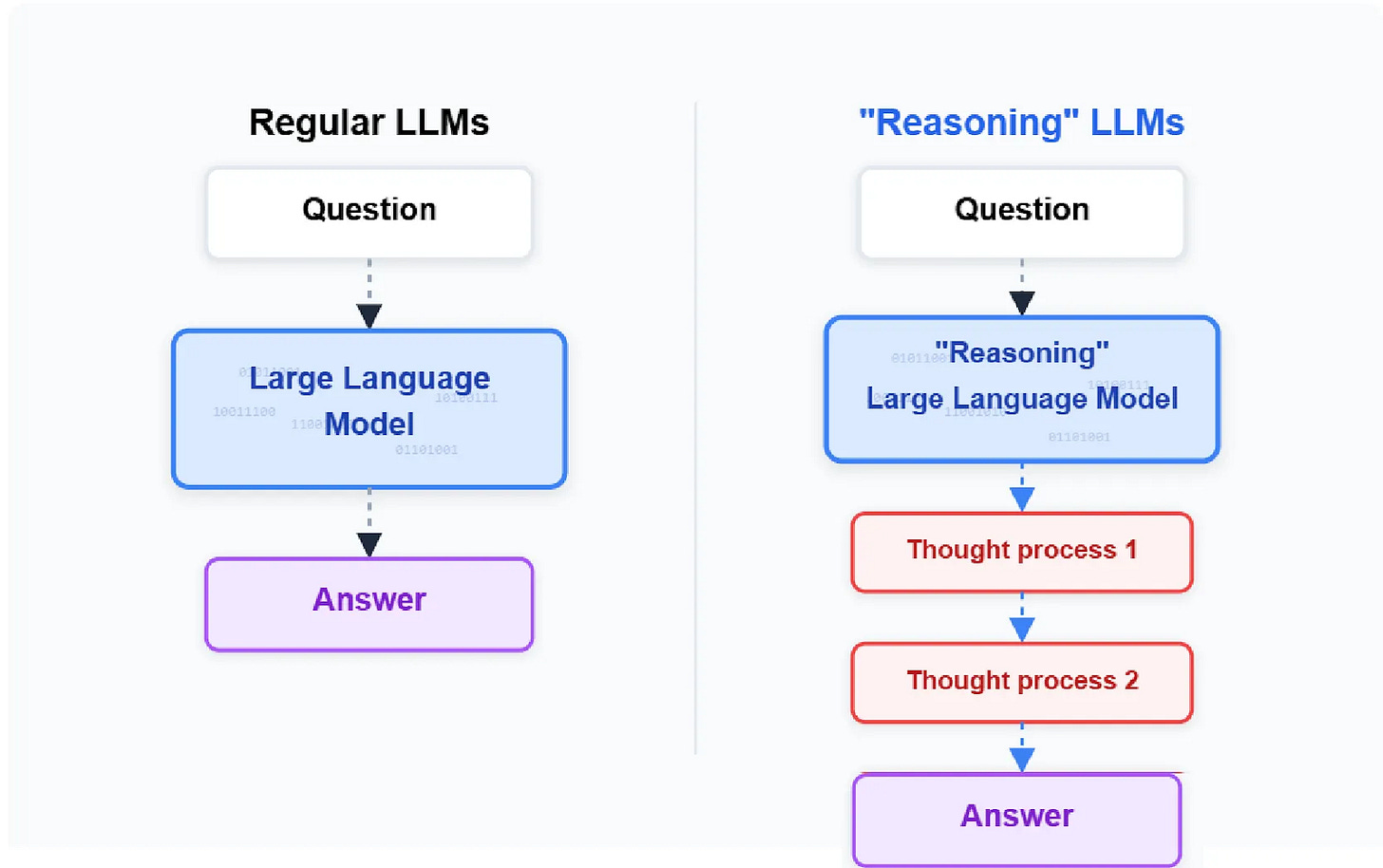

Peter found himself drowning in digital chaos, messages scattered across WhatsApp, Slack, and email; tasks buried in different apps; information trapped in silos he had to manually search. He’d spend hours context-switching between tools, copying information from one place to another, and running the same shell commands repeatedly. The AI assistants he tried were impressive in demos, but useless in practice. They could chat, but they couldn’t act. They lived in their own isolated windows, disconnected from his actual workflow.

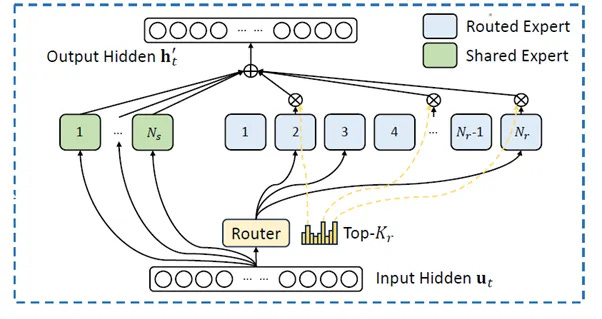

“I wanted an AI that could live where I already work,” Peter explained. “Not another app to check. Not another interface to learn. Something that could read my messages, understand what I needed, and actually do things, browse the web, run commands, and access files. I wanted it to work for me, not just talk to me.”

On a Friday night in November 2025, Peter sat down and built the first version of OpenClaw in one hour.

“It was just simple glue code connecting the WhatsApp interface with Claude Code. Although the response was slow, it worked.”

His goal: wire a large language model into messaging apps so it could read messages, browse the web, and run shell commands on his behalf.

He called it Clawdbot, a play on “Claude” and the lobster mascot from Claude Code.

The product philosophy was clear from day one:

- Local-first: run it on a laptop, homelab, or VPS you control

- Chat-native: use familiar chat apps as the interface

- Agentic: connect an LLM to tools and permissions so it can act

“It wasn’t ‘a chatbot.’ It was ‘a system.’”

The Chaos of Going Viral

Through December and early January, Clawdbot spread quietly through developer circles. By mid-January 2026, it had about 2,000 GitHub stars.

Then everything exploded.

January 27, 2026: Anthropic sent a trademark notice. “Clawdbot” was too similar to “Claude.” Peter agreed to rename immediately.

During the rebranding, disaster struck. In the 10 seconds between releasing the old handle @clawdbot and claiming the new one, scammers grabbed it and launched a fake $CLAWD token on Solana. The token hit $16 million in market value before crashing to zero.

The project was renamed Moltbot (referencing how lobsters molt to grow), then two days later, renamed again to OpenClaw.

This time, the name stuck.

The Viral Explosion

By late January 2026, OpenClaw had gone from a quiet developer tool to an internet phenomenon:

- 2 million visitors in one week

- 180,000+ GitHub stars

- Fastest-growing repository in GitHub history

People on social media joked about buying Mac Minis just to run their own always-on agents. Business Insider reported that some users were actually doing it.

Cloudflare’s stock surged 14% pre-market as developers deployed OpenClaw using their services.

On Instagram, even non-tech people started posting pictures of themselves buying Mac Minis from Apple Stores.

In a recent Lex Fridman podcast, Peter Steinberger revealed that OpenClaw has become so popular that both Mark Zuckerberg of Meta and Sam Altman of OpenAI are interested in acquiring the project.

The Security Reckoning

But rapid growth came with severe consequences:

- 400+ malicious packages identified in OpenClaw’s plugin marketplace (ClawHub)

- 341 confirmed malicious skills designed to steal user data

- 93.4% of publicly accessible OpenClaw instances had critical authentication bypass vulnerabilities

One researcher demonstrated a complete attack in five minutes: they sent a crafted email to a user running OpenClaw with email integration. The agent processed the email, followed the injected instructions, and forwarded the user’s last five emails to an attacker. The user didn’t click anything. The agent did the work.

Peter acknowledged the concerns but maintained his vision:

“This is a free, open-source hobby project that requires careful configuration to be secure. OpenClaw is primarily suited for advanced users who understand the security implications.”

Peter Steinberger’s journey reveals a consistent pattern:

The best way to build an awesome product is to solve a problem that you have.

In both of Peter’s ventures, he started by solving his own problem. With PSPDFKit, it was handling PDFs elegantly on iOS. With OpenClaw, it was connecting AI to his daily workflow through messaging apps. In both cases, it turned out that everyone had the same problem.

But there’s something deeper here that most startup advice misses: Personal frustration is product-market fit in its earliest, rawest form.

When Peter lost that message in the Vienna subway, he felt genuine rage. When he couldn’t find a good PDF solution, it bothered him personally. When he wanted an AI agent that could act on his behalf through messaging apps, he built it for himself first. He didn’t need to validate that he had the problem. He didn’t need to convince himself to care. He didn’t need motivation to keep iterating because he was still frustrated until it worked.

This is the paradox most founders miss: Your constraints aren’t obstacles to your breakthrough; they often are the breakthrough itself. PSPDFKit wasn’t born from ambition; it was born from a nine-month visa delay and abundant free time. OpenClaw emerged from burnout and a desire to reconnect with building. Both products came from moments when Peter couldn’t pursue the “obvious” path.

The tech industry glorifies vision, disruption, and scale. But Peter’s story suggests a different path: Start small. Start personal. Start with what pisses you off today.

What problem are you living with today that you’re uniquely positioned to solve?

We’d love to hear from you. Hit reply and share the frustrations you’re working through—your answer might inspire another founder, or you might discover you’re not alone in what you’re building.

Want to learn more? If we get 500 likes, we’ll invite Peter for a live FounderCoHo community interview. Help us get there, hit like and share!

If this story resonated with you, connect with the author Jing Conan Wang

Reference

PSPDFKit €100M Investment & Peter Steinberger’s Background

OpenClaw Security Vulnerabilities & CVE-2026-25253

https://thehackernews.com/2026/02/openclaw-bug-enables-one-click-remote.html

Malicious Skills in OpenClaw Ecosystem

https://blogs.cisco.com/ai/personal-ai-agents-like-openclaw-are-a-security-nightmare

Moltbook Launch & Viral Phenomenon

https://edition.cnn.com/2026/02/03/tech/moltbook-explainer-scli-intl

Peter Steinberger’s Return & OpenClaw Origin Story

https://www.panewslab.com/en/articles/b58b5897-8d1d-4bd3-a98e-a77fe3b4b315